So far we have seen how replication helps scale a read-intensive workload. We keep one or more nodes as leaders that handle write traffic and use multiple replica nodes to handle read traffic. Replication rests on one assumption: the complete database fits on a single node. If the dataset doesn’t fit on a single node, replication alone won’t work. If your application stores data about users, which can range from simple login information to event metadata about users’ interactions with the application for analytical purposes, the storage you use is bound to reach its capacity over time.

You can choose to invest in upgrading the storage capacity of each node, but the cost of upgrading multiplies with the number of nodes in the cluster. If upgrading one node costs $100 and our leader-follower system has 1 leader and 5 followers, we need to write a check for $600 ($100 for the leader and $100 for each of the 5 followers). Clearly this approach is not going to be cost-effective in the long run. So when the dataset outgrows server capacity and upgrading no longer looks like a profitable option, we can start thinking about partitioning.
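
The arithmetic above can be written as a tiny sketch. The function name `upgrade_cost` and its parameters are hypothetical, and the model assumes every node costs the same to upgrade because each one holds a full copy of the data.

```python
def upgrade_cost(cost_per_node: int, leaders: int, followers: int) -> int:
    """Total cost of upgrading every node in a fully replicated cluster.

    Assumes each node stores the complete dataset, so every node
    needs the same upgrade.
    """
    return cost_per_node * (leaders + followers)


# The example from the text: 1 leader and 5 followers at $100 each.
total = upgrade_cost(100, leaders=1, followers=5)
print(total)  # 600
```

Every follower you add for read scaling raises the price of the next storage upgrade by the same amount.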

Note that partitioning shouldn’t be implemented from day one unless absolutely necessary. Like every other tool in distributed computing, partitioning is not a silver bullet. It comes with its own set of challenges and requires a huge amount of engineering effort to make it work correctly for an application. If your application can work correctly by leveraging replication, or by upgrading the capacity of a node within your budget, prefer that over partitioning. Always remember that writing a blog post describing how you performed partitioning using fancy approaches on your Rust/Decentralized/<Insert more tech buzzwords> web-service doesn’t sound cool if you are fire-fighting service outages every day.

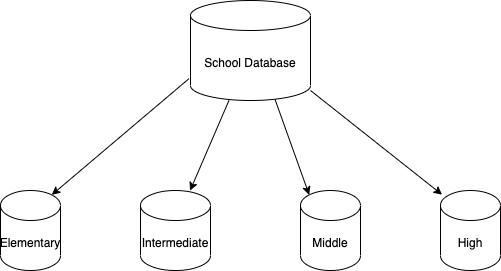

Partitioning, as the name suggests, means dividing your dataset into multiple smaller datasets so that each of them can operate as an individual, independent store. Each partition can be scaled individually based on the traffic load it sees. For example, consider a service that lets us query results for a nationwide test. If we partition the database by school level (elementary, intermediate, middle, high), we know the dates on which results for each level will be released. Accordingly, we can scale each partition based on the number of students enrolled in that level. There is a pretty low probability that we will see high query traffic on the elementary partition on the day when results for the middle school level are announced.
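
Here is a minimal sketch of partitioning by school level. The class `PartitionedResults`, its methods, and the sample student ID are hypothetical, and plain in-memory dictionaries stand in for what would be separate databases or node clusters in a real deployment.

```python
LEVELS = ("elementary", "intermediate", "middle", "high")


class PartitionedResults:
    """Routes each read and write to the partition for a school level."""

    def __init__(self):
        # One independent store per level (a dict here, a separate
        # database or cluster in practice).
        self._partitions = {level: {} for level in LEVELS}

    def _partition_for(self, level):
        if level not in self._partitions:
            raise ValueError(f"unknown level: {level!r}")
        return self._partitions[level]

    def put(self, level, student_id, score):
        self._partition_for(level)[student_id] = score

    def get(self, level, student_id):
        # Only the partition for this level is touched.
        return self._partition_for(level).get(student_id)


store = PartitionedResults()
store.put("middle", "s-101", 87)
print(store.get("middle", "s-101"))      # 87
print(store.get("elementary", "s-101"))  # None
```

Because a lookup for a middle school student never touches the elementary partition, each store can be sized and scaled on its own schedule.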

So whenever we encounter high request traffic for a particular partition, we can add more nodes to that partition to handle it. Partitioning data on logical criteria also tells us what traffic load to expect on each partition. This helps in building an alert system that triggers when the traffic load does not match our expectation, so service failures can be detected quickly. For example, if we own a checkout service that is part of a food-delivery application, we can expect a higher load on a Friday evening, when everybody starts their weekend, than on other days. Our application should be prepared to handle this traffic based on prior metrics, and it should alert if it doesn’t end up serving traffic up to a certain threshold. So if we are not seeing 1.5x the usual traffic on a Friday evening for users based in cities such as New York, there might be some other issue in the application that is preventing requests from users in the New York partition from reaching the checkout service.
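
The Friday-evening check described above could be sketched like this. The function name `should_alert`, the request counts, and the default multiplier of 1.5 are illustrative assumptions taken from the example, not values from a real monitoring system.

```python
def should_alert(observed: float, baseline: float,
                 expected_multiplier: float = 1.5) -> bool:
    """Return True when a partition's traffic falls short of its expected peak.

    observed: requests seen in the peak window (e.g. Friday evening)
    baseline: requests typically seen in the same window on other days
    """
    return observed < baseline * expected_multiplier


# Hypothetical numbers for the New York partition.
print(should_alert(observed=1200, baseline=1000))  # True: below 1.5x
print(should_alert(observed=1600, baseline=1000))  # False: peak arrived
```

A missing peak is treated as a failure signal here, which is the opposite of the usual alert on traffic that is too high.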

Partitioning can also become a bottleneck if our application requires cross-partition querying. For example, suppose we partitioned our database by the standard a student studies in, but our application now has to provide a list of students whose names start with A regardless of their standard. To fulfill this request we have to query every partition and aggregate the results. These partitions can live in separate node clusters or even separate data centers, which degrades the performance of the query.
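
A rough sketch of that cross-partition query follows. The function `students_with_prefix` and the sample data are hypothetical, and each partition is modelled as an in-memory list; in a real system every iteration of the loop would be a network call to a different cluster or data center.

```python
def students_with_prefix(partitions, prefix):
    """Query every partition and merge the matches (scatter-gather).

    partitions: mapping of standard -> list of student names
    Returns a sorted list of (name, standard) tuples.
    """
    matches = []
    for standard, names in partitions.items():
        # One query per partition: cost grows with the partition count.
        matches.extend(
            (name, standard) for name in names if name.startswith(prefix)
        )
    return sorted(matches)


partitions = {
    "5": ["Asha", "Bob"],
    "6": ["Alex", "Carl"],
    "7": ["Dina"],
}
print(students_with_prefix(partitions, "A"))
# [('Alex', '6'), ('Asha', '5')]
```

Even the partition for standard 7, which has no matching student, must be queried before the result is complete.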

Hence, before partitioning, we need to think through what our data model looks like and which access patterns our service supports or will need to support. Focusing on clean architecture and decoupled systems well before you actually need partitioning will help a lot in adopting it later with minimal changes.