Ensuring that each resource is accessed and modified by a single user at a time is very complex on the application layer. This is why databases have built a lot of standard functionality that application developers can leverage to handle concurrent users. One such functionality is transaction isolation. This determines the visibility level of changes done by one transaction to another transaction.

Sadly maintaining serializability is not so straightforward in transaction isolation. By serializability we mean that if user_one changes value of resource A from 1 to 2 and user_two changes value of A from 1 to 3 then it becomes difficult for data storage applications to maintain an order in which the resource is changed. The final value can end up becoming either 2 or 3 based on the order in which data store processes these transactions.

This responsibility is shifted to the application layer in the majority of the cases and data storage provides a weaker level of isolation. When we are working with data stores that provide weaker isolation levels then we need to tackle concurrency bugs that creep in by using these stores on the application layer. Few of storage applications do support strong isolation levels and provide functionality for serializable transactions. Example of one such store is OceanStore

As part of the next few posts we will explore these isolation levels and challenges they present in a highly concurrent system. In this post we start by exploring read committed isolation level. This isolation level ensures that:

No Dirty Reads

If a transaction has performed some write operations as part of a transaction but not committed the transaction or aborted it in future, does it make sense for other transactions to read this uncommitted data? If they are able to read this data then it is considered as dirty read.

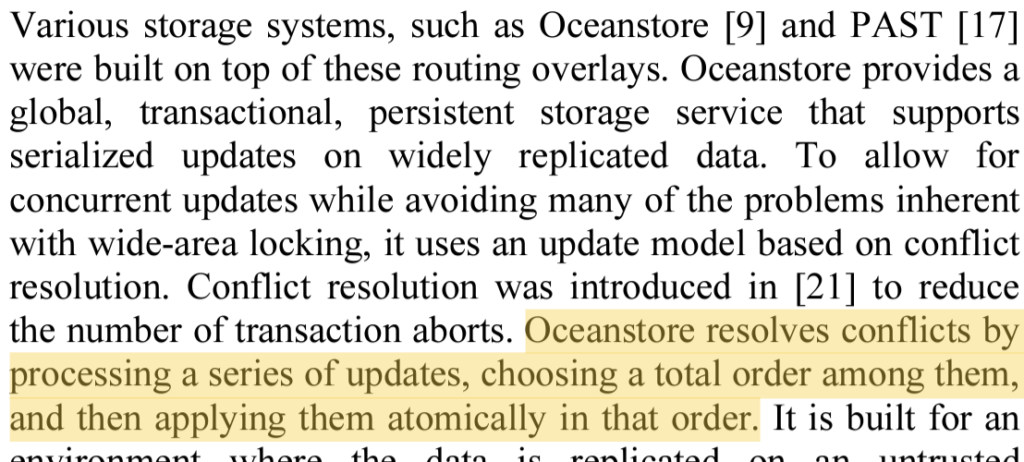

When reading from the database with read committed isolation level, we will only see that data which has been committed. Hence we overcome the problem of dirty reads that we saw as part of our last post. This is achieved as below:

Here, updates that are done as part of transaction initiated by Client1 is not visible to Client2 until the transaction is committed. Once Client1 commits the transaction, Client2 is able to see the updated value. This ensures that Client2 doesn’t ends up reading a value which was never part of database in case Client1 decides to abort the transaction after the update.

No Dirty Writes

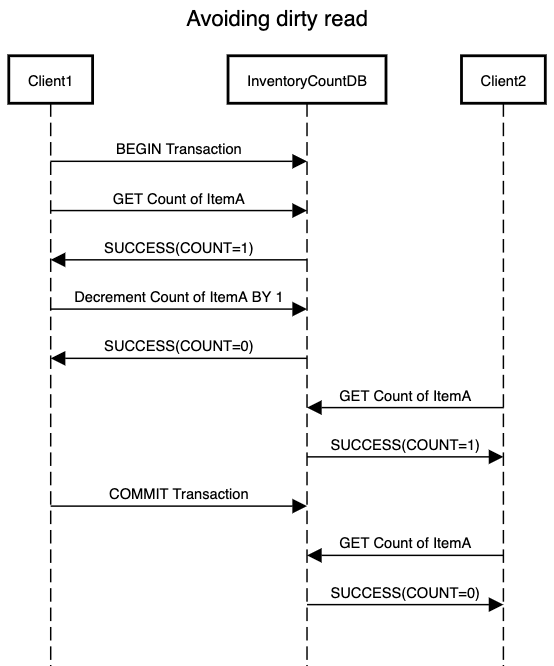

When database receives concurrent requests to update a resource, we have an assumption that last write wins. For example a counter. If each update to this record increments the counter by one then we can be rest assured that no matter in what order the request is processed, we will get the correct value. Example if current counter is 1 then two requests T1 & T2 acting concurrently can update the value in any order. Either T1 can change it from 1 to 2 followed by T2 changing it from 2 to 3 or vice versa. We will always end up with a value of 3.

But what happens if this update is a part of a transaction which ends up getting aborted. Assuming T1 gets aborted, we will still end up with a value of 3 for the counter which is incorrect.

When writing to the database we will only write to data that has been already committed. This solves the problem of dirty writes. This simply means that a resource which is being updated by one transaction will be locked until the transaction is either committed or aborted.

Although Read committed avoids dirty writes, it works for special cases where last write-wins ends up in valid state as described above. This works for a record type that has a predictable state like a counter which is more of an anomaly than a norm in most use cases.

For read committed isolation level, acquiring a lock for write on row being updated might solve the issue dirty writes but the same approach doesn’t works in case of read requests. Acquiring a lock for read request will block the client and will end up becoming a performance bottleneck. Hence another approach is to store the value of record before the transaction began and provide it to clients that query for record in middle of transaction. This ensures that the read requests are not blocked in case of concurrent transactions.

Many of the mainstream databases use read committed isolation level. It is the default isolation level in PostgreSQL. Although read committed might not be sufficient to tackle all the issues that can arise due to concurrent transactions. In next post we will look at what scenarios does it misses out on and how Snapshot isolation covers the missing gaps.

One thought on “Transactions: Read committed isolation”

Comments are closed.